▩▩▩▩▩▩▩ WEEK 9 ▩▩▩▩▩▩▩

Challenges with Ideation

Throughout my experiments, I've started to recognize the limitations of the TouchDesigner software. This

realization has led me to be more mindful of aligning my ideas with both the capabilities of the software

and my own skills.

As I've progressed, I've reconsidered my initial plan to create one prototype for each dilemma. I don't

think I explicitly committed to this approach in my proposal. Therefore, I'm inclined to take a more

iterative path, focusing on developing a single prototype initially and gradually refining it. If the need

arises to create additional prototypes, I will do so at that stage.

On a positive note, after exploring numerous YouTube videos, I've finally found inspiration, and

interestingly, it came from Brad Troemel. In one episode of his 'CloutBombing' series, he emphasizes the

significance of representative diversity as a measure of content quality. Social media users seeking

engagement leverage this concept to ensure their content reaches a diverse audience.

My idea builds upon this concept: What if every content creator was required to meet a certain

representative diversity threshold for their content to receive higher rankings in recommendations? This

raises the intriguing question of how algorithms can oversee and implement such a curation process.

How then can I visually represent this using a designed outcome? Since my method hinges on speculative

prototyping, I was drawn towards creating a make-believe prototype that will provoke critical thought

and spark discourse.

As I progressed in my learning of TouchDesigner, I became more acquainted with the software's limitations

and started envisioning potential approaches. Specifically, in the context of promoting racial diversity,

one idea is to incorporate face detection technology. This system would identify faces in an image,

convert them to grayscale, and calculate a median value. Then, 'if, else' functions could be used to

instruct the code to determine whether the image passes or fails the racial diversity check.
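
The check described above could be sketched roughly as follows. This is a minimal illustration in plain Python, not the TouchDesigner network itself: it assumes the faces have already been detected and cropped into lists of (R, G, B) pixels, and the function names, the grayscale weights, and the `min_spread` threshold are all hypothetical choices made for the example. Like the prototype, the rule is deliberately crude.

```python
from statistics import median

def to_gray(pixel):
    """Convert one (R, G, B) pixel to a single brightness value (0-255)."""
    r, g, b = pixel
    # Common luma weights (ITU-R BT.601); an assumption, any grayscale formula would do.
    return 0.299 * r + 0.587 * g + 0.114 * b

def face_median(face_pixels):
    """Median grayscale value of one cropped face region."""
    return median(to_gray(p) for p in face_pixels)

def passes_diversity_check(faces, min_spread=40):
    """Deliberately crude rule: pass if the face medians differ by at least min_spread."""
    medians = [face_median(face) for face in faces]
    if len(medians) < 2:
        return False  # a single face (or none) cannot show any spread
    if max(medians) - min(medians) >= min_spread:
        return True
    else:
        return False

# Two tiny made-up "faces", each just a handful of pixels.
light_face = [(230, 200, 180), (225, 195, 175), (235, 205, 185)]
dark_face = [(90, 60, 45), (95, 65, 50), (85, 55, 40)]

result_mixed = passes_diversity_check([light_face, dark_face])
result_same = passes_diversity_check([light_face, light_face])
```

Reducing a face to one brightness number and comparing it against a fixed threshold is exactly the kind of shortcut that can be gamed, which is the point of the provocation.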

It's important to note that this prototype is deliberately biased, serving as a provocation to

prompt questions about how such a system might be exploited and what alternative solutions could be

proposed. This approach aligns perfectly with my overarching goal of fostering discourse and, through

that, nurturing collective algorithm literacy.

I'm genuinely enthusiastic about this idea because I perceive a wealth of potential for expansion and

ongoing iteration. The concept also offers numerous avenues for introducing interactivity, inviting

viewers to fully engage with this speculative scenario. This immersion can naturally lead to more profound

and meaningful discussions.

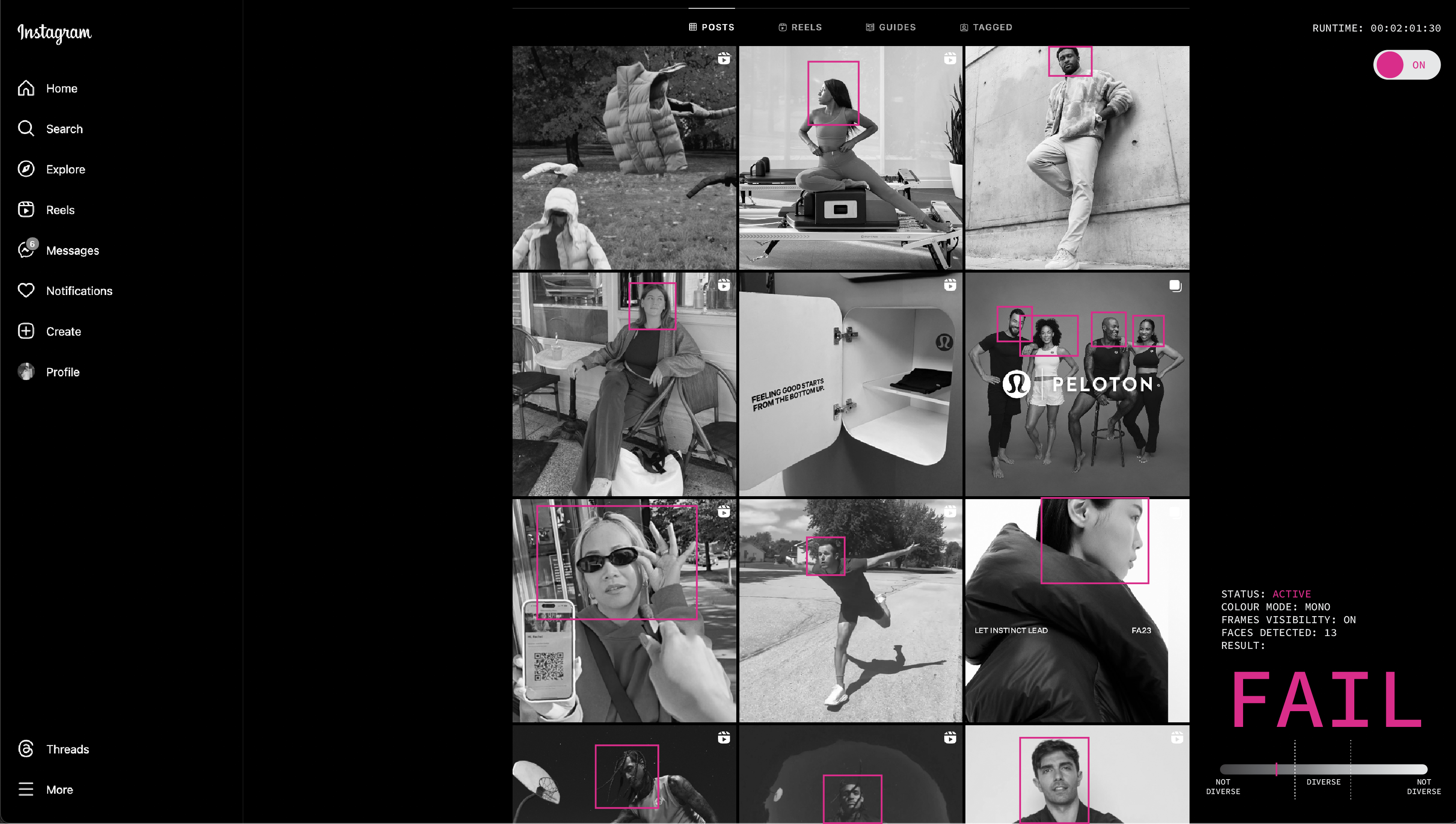

Visualisation of prototype UI

Draft of analysis sequence

First steps into prototyping (Multi Face Tracking + Screen Recording Input)

I recall encountering the concept of object detection and identification during my first year in CiD. At

that time, I had a limited grasp of how it functioned. Revisiting the topic now, my understanding remains

somewhat rudimentary. In essence, the process involves feeding image training datasets into algorithms,

labeling them, and then, through the machine learning process, the algorithms gain the capability to

identify objects based on the relationships within pixel data from neighboring elements.

I stumbled upon a tutorial on integrating OpenCV in TouchDesigner, and what initially seemed like a

16-minute follow-along video turned into hours due to the challenges I encountered. While I had previously

encountered tutorials involving the Python coding language in my experiments, this one introduced a new

layer by requiring me to import a script. Even though my code appeared to be identical to that in the

tutorial, it simply wasn't working.

After investing a considerable amount of time in troubleshooting, I eventually pinpointed the issue: it

stemmed from differences in how Windows and macOS handle file paths, which led to complications in importing my XML

file. To make matters more perplexing, copying and pasting the file path from Finder's 'Get Info' didn't resolve

the problem. This is when I reached out to my hero, my Dad, for assistance. Through a series of commands

in Terminal, he helped me retrieve the correct file path, and everything fell into place afterward. So

thankful for a code-literate family.
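
One way to sidestep this kind of problem is to build the path in Python instead of pasting it in as a string. The sketch below uses the standard `pathlib` module and checks that the file exists before anything tries to load it. The function name and the folder and file names in the usage comment are placeholders, not the actual project layout.

```python
from pathlib import Path

def resolve_cascade_path(folder, filename):
    """Build an absolute path that works on both Windows and macOS."""
    # Path joins the parts with the right separator for the current OS,
    # and expanduser() turns a leading "~" into the home folder.
    path = (Path(folder).expanduser() / filename).resolve()
    if not path.is_file():
        raise FileNotFoundError(f"Cascade file not found: {path}")
    return path

# Hypothetical usage with a placeholder folder and file name:
# cascade_path = resolve_cascade_path("~/Documents/project", "haarcascade_frontalface_default.xml")
# The string form, str(cascade_path), is what would be handed to OpenCV.
```

Failing early with a clear error message makes it obvious whether the problem is the path or the code that uses it.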

In addition to the technical challenges, I wanted to introduce an interactive element into this prototype.

To achieve this, I configured my iPad as a secondary monitor and used the Screen Grab TOP to set my

iPad screen as the primary video input. With this setup, I could easily scroll through images on my iPad

while observing the algorithm's functionality.

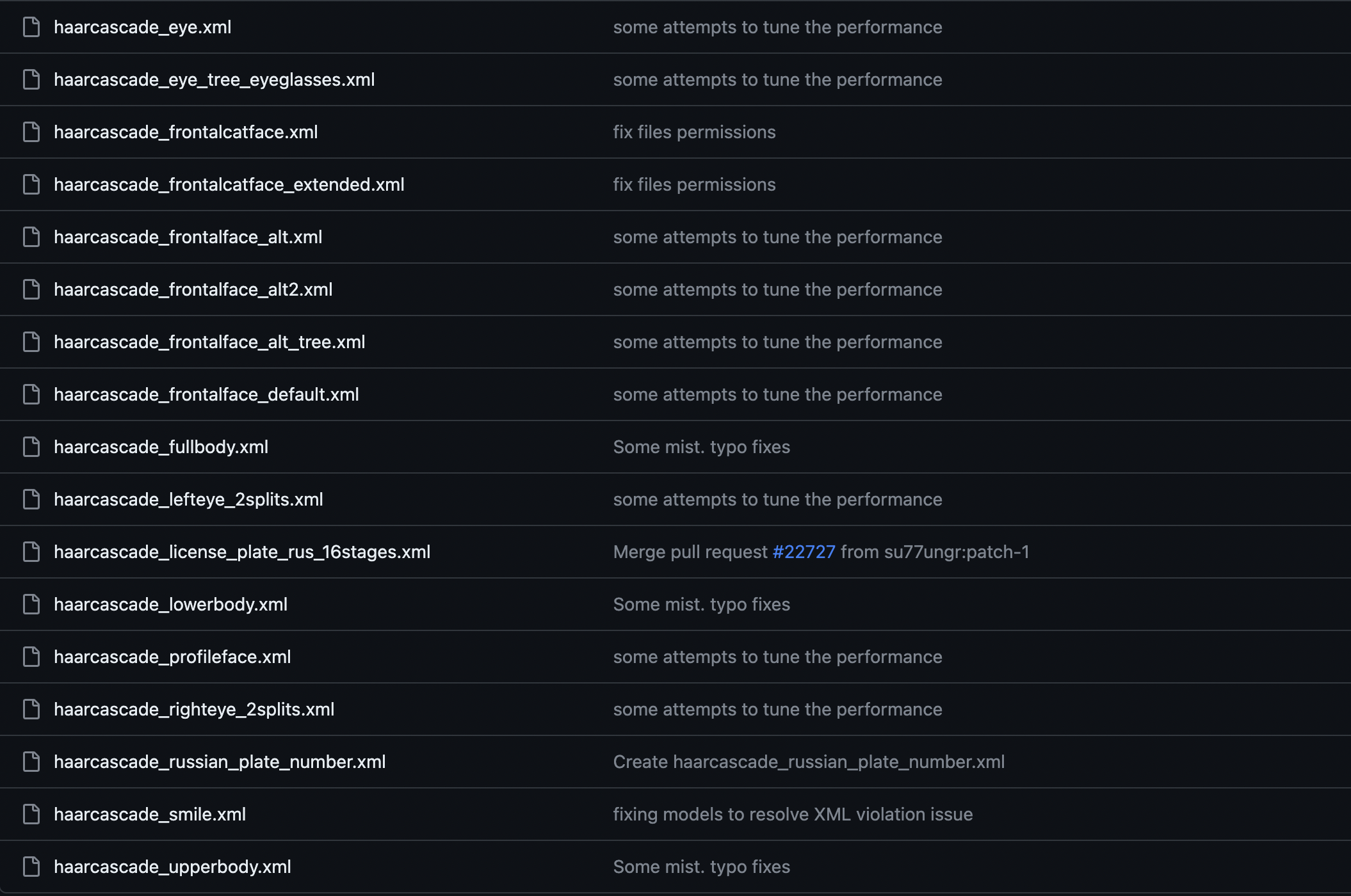

I observed a significant inaccuracy in the facial detection algorithm, prompting me to investigate the

OpenCV Haar Cascade algorithm. I discovered that this algorithm primarily identifies faces that are

oriented directly toward the camera and has limitations in detecting side profiles or faces below a

specific pixel size threshold. Within the Haar Cascade GitHub repository, I encountered alternative

scripts designed for specific types of detections, such as side profiles, full-body recognition, and eye

tracking. However, upon testing these scripts, I realized that they necessitated distinct code setups.

To validate these findings, I conducted further tests scrolling through a Google image search of faces on

my iPad. Even though the images met the minimum pixel size criteria for face detection, the results

remained inconsistent. Surprisingly, the detector missed around 9 out of 10 faces I expected it to

identify. This discrepancy raised considerable concern, compelling me to explore alternative object

detection methods. Since I was unsure which search terms to use and needed expert guidance, I

decided to schedule a consultation with Andreas.
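
To put a number on the inconsistency rather than rely on an impression, the misses could be tallied per image. The sketch below is a hypothetical helper, not part of the tutorial code: `detect` stands for any function that returns a list of face boxes, and the comment shows how OpenCV's `detectMultiScale` might be plugged in, with parameter values that are only starting points.

```python
def detection_rate(images, expected_counts, detect):
    """Return the fraction of expected faces that the detector found."""
    found = 0
    expected = 0
    for image, count in zip(images, expected_counts):
        boxes = detect(image)
        # Cap at the expected count so false positives don't inflate the score.
        found += min(len(boxes), count)
        expected += count
    return found / expected if expected else 0.0

# With OpenCV, `detect` could wrap a Haar cascade, for example:
#   cascade = cv2.CascadeClassifier(path_to_xml)
#   detect = lambda gray: cascade.detectMultiScale(
#       gray, scaleFactor=1.1, minNeighbors=5, minSize=(30, 30))
# Lowering minNeighbors or minSize makes the detector more permissive.

# Stand-in detector for illustration: pretends to find one face in every image.
fake_detect = lambda image: [(0, 0, 10, 10)]
rate = detection_rate(["img_a", "img_b"], [1, 3], fake_detect)
```

Running the same set of images through different parameter settings, or through a different detector, would then give directly comparable figures.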

While awaiting a response, I opted to continue my experimentation with the face detection algorithm.

During my earlier research, I encountered numerous articles discussing the accusation

that face detection tools tend to exhibit bias. This bias can often be attributed to the absence of

diverse training data. Considering that the Haar Cascade detector I was using is well over a decade old, it's

likely outdated and might produce results that reflect those biases.

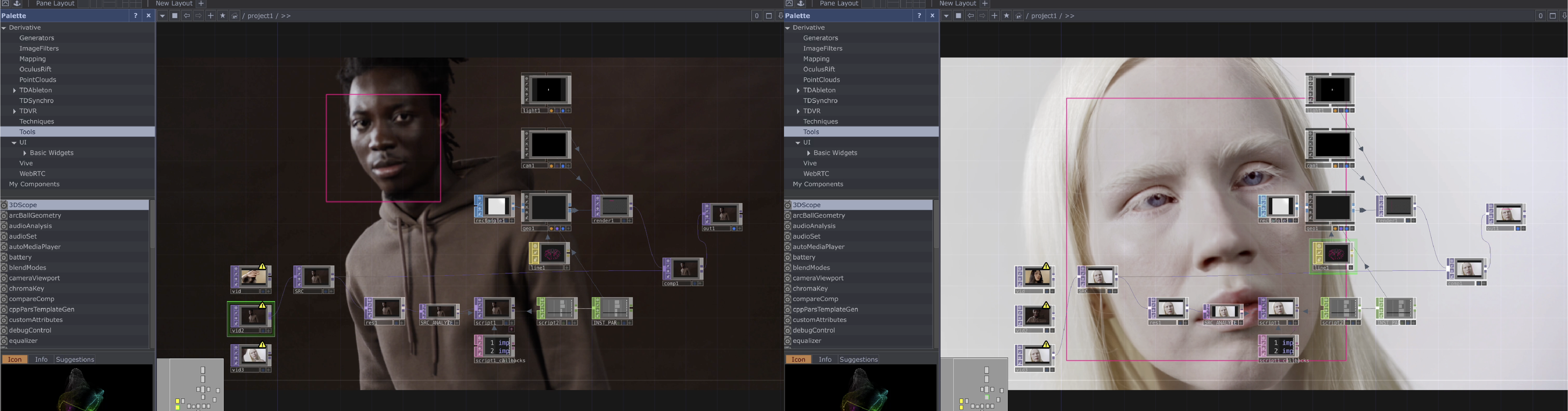

I found a collection of videos featuring individuals with a range of skin tones. These

videos were all recorded within the same studio, under identical lighting conditions, and with backgrounds

that matched the respective individual's skin tone. To my surprise, the facial detection algorithm was

able to identify the faces in all of these videos. I had half-hoped for a failure in detection, as it

could have offered more value to my research (just kidding). Subsequently, I revisited the articles that

had previously raised concerns about bias in facial detection tools. It became clear that the accusations

of bias pertained to identification rather than mere detection.

In the next stages of my project, I'll need to figure out how to create an instance of the identified face

frame and extract its color values. While I'm confident that it's achievable, I need to explore the

methods to make it happen. I intend to discuss this particular challenge with Andreas during our upcoming

consultation.
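
One possible starting point, sketched in plain Python: OpenCV's cascade detector reports each face as a box `(x, y, w, h)`, so the face frame can be cut out by slicing rows and columns, and its color summarised by averaging. The image here is a nested list of (R, G, B) tuples and the function names are made up for the example; inside TouchDesigner the pixels would more likely arrive as a NumPy array, where the same slicing idea applies.

```python
def crop_face(image, box):
    """Cut the region described by box = (x, y, w, h) out of a row-major image."""
    x, y, w, h = box
    return [row[x:x + w] for row in image[y:y + h]]

def mean_color(region):
    """Average (R, G, B) over every pixel in a cropped region."""
    pixels = [pixel for row in region for pixel in row]
    n = len(pixels)
    if n == 0:
        raise ValueError("empty region")
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))

# A made-up 4x4 image: black background with a 2x2 "face" of one color.
image = [[(0, 0, 0)] * 4 for _ in range(4)]
for yy in (1, 2):
    for xx in (1, 2):
        image[yy][xx] = (200, 150, 120)

face = crop_face(image, (1, 1, 2, 2))
color = mean_color(face)
```

A mean is used here for simplicity; the median described earlier in the plan would slot in the same way and is less affected by highlights and shadows.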

Grabbing file path through Terminal

Feeding iPad screen as live input

Other alternative scripts

Testing facial detection on different skin tones

Catalogue of Making Update

As I looked through some of the past years’ graduating works and their catalogue of making, I started to

find it difficult to document my own experiments. This is probably because my experiments weren’t really

experiments; they leaned more towards tutorial outcomes. The missing link lay in my struggle to

contextualize these outcomes within the framework of my research. Despite consciously selecting tutorials

that dealt with images, pixels, and data, their influence didn't manifest distinctly enough in the visual

results of my work.

Much of the process didn't yield visuals that significantly diverged from the final outcome. As a result,

my documentation mainly comprised screen captures depicting the interconnection of nodes. However, I found

this aspect of the process less visually engaging, which made it challenging to document effectively.

Furthermore, my journey to the experiment outcomes followed a notably different trajectory. Much of it was

shaped by serendipity, primarily due to my limited proficiency in TouchDesigner. Separating the 'building

up' phase from the final outcome was difficult, as the entire process involved multiple iterations, with

each new iteration building upon the previous one.

While I don't believe my approach is wrong, I personally prioritize documenting meaningful and

high-quality experiments over quantity. However, given the substantial amount of time invested in these

experiments, I was reluctant to let that effort go to waste.

To address this dilemma, one strategy I'm considering is revisiting the images I've used in my experiments

and finding ways to weave a narrative within the context of my research. For those experiments where

abstract visuals took precedence, I believe it would be more beneficial to explain the underlying

objectives, offering viewers some context to better understand the work.

Screen captures from Goh Sing Hong's FYP Documentation AY21/22

Crafting narratives, justifying outcomes

Rather than formulating vague or perplexing narratives, I took a more deliberate approach. I attempted to recollect what initially drew me to these tutorials and why I had chosen them. I reflected on how I perceived their relevance to my research at the time.

Through this method, I was able to craft descriptions that possessed greater depth and intention. It enabled me to gain a clearer understanding of my mindset during the experimentation process. I distinctly remember being highly discerning when it came to the selection of images. Whether it was to assess the compatibility of a particular effect with a specific type of image or driven by considerations of visual aesthetics, I could readily distinguish between these two distinct criteria.

Experiment C Description