▩▩▩▩▩▩▩ WEEK 12 ▩▩▩▩▩▩▩

Making a New Friend on Fiverr

As the stress of my non-functional prototype began to weigh on me, I turned to Fiverr in search of someone who could help me troubleshoot my code. I had previously sought help from various people, only to realize that explaining my project, however well I articulated it, was a significant challenge. To communicate my needs effectively, I had to understand every component of my TouchDesigner patch, OpenCV, and every line of code I had written.

Initially, I intended to hire someone to troubleshoot my patch and explain thoroughly how all the elements came together. The project was intricate and unconventional, combining code with node-based design software. This complexity, coupled with my limited familiarity with the programming language, the software, and the various methods needed to link them, left me feeling overwhelmed.

Enter Stefan, my new friend from the Netherlands. After I briefly described my project, he generously offered to help me for free, recognizing the significance of my Final Year Project (FYP). What set him apart from the others I had consulted, including Andreas, was his willingness to invest time in understanding my project. He asked pertinent questions, pointed me to the precise tools I needed for my prototype research, and offered valuable guidance.

Some YouTube tutorials were overly complex and assumed prerequisite knowledge, and they typically didn't link to the required background material. Stefan came to the rescue by helping me pinpoint the prerequisites, supplying the relevant video links, and explaining clearly why each prerequisite was essential.

Stefan also offered valuable insights by identifying potential issues in my patch and proposing alternative operators to streamline my future work. One notable example is the inaccuracy inherent in OpenCV's HaarCascade face detection, which could take a substantial amount of time to rectify. To avoid such complications, he recommended switching to YOLO, as it would be more efficient in the long run. Making this switch early would avoid having to remap values later, since HaarCascade and YOLO require different setups. There were a few hits and misses: he also suggested MediaPipe, for example, and although it didn't work for my project, it was still a good reference and learning material.
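To illustrate why switching detectors early avoids remapping later, here is a minimal Python sketch. It assumes the HaarCascade boxes come from OpenCV's `cv2.CascadeClassifier.detectMultiScale`, which reports `(x, y, w, h)` in pixels with `(x, y)` at the top-left corner, and that the YOLO side uses the normalized, centre-based `(cx, cy, w, h)` layout of YOLO label files. The function name `haar_to_yolo` is hypothetical and not part of either library.

```python
# Hypothetical helper: convert an OpenCV Haar cascade detection into the
# normalized, centre-based layout used by YOLO-style labels.
# Assumption: Haar boxes are (x, y, w, h) in pixels with (x, y) at the
# top-left corner, as returned by cv2.CascadeClassifier.detectMultiScale.


def haar_to_yolo(box, frame_w, frame_h):
    """Map a pixel (x, y, w, h) top-left box to normalized (cx, cy, w, h)."""
    x, y, w, h = box
    cx = (x + w / 2) / frame_w  # box centre as a fraction of frame width
    cy = (y + h / 2) / frame_h  # box centre as a fraction of frame height
    return (cx, cy, w / frame_w, h / frame_h)


if __name__ == "__main__":
    # A 200x200 px face whose top-left corner is at (540, 260) in a 1280x720 frame
    print(haar_to_yolo((540, 260, 200, 200), 1280, 720))
```

A face centred in the frame maps to `cx = cy = 0.5` whatever the resolution, so any expressions written downstream have to assume one format or the other.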

Armed with the resources and knowledge he shared, I became far more confident about completing the project. I had been stuck at the same point for three weeks, teetering on the edge of giving up and contemplating a completely new idea. Stefan gave me a step-by-step explanation of how all the components fit together and of the most efficient way to achieve my objectives. I am profoundly grateful for this newfound friendship. Stefan's passionate support and guidance went beyond mentorship; despite our short acquaintance, he became a true friend who helped me pursue my project with renewed vigor.

Stefan's Fiverr Profile

Conversations with Stefan

Scanned Face Cropping in TouchDesigner

After working through so many TouchDesigner tutorials during last week's Learning Day, I began to understand how TouchDesigner operators interact and work together. This software is entirely different from what I'm used to. Honestly, the only analogy I can come up with right now is the Crafting Table in Minecraft: different elements combined produce different results.

On my bus ride home, I had a breakthrough: I saw how to extract the bounding box values for detected faces and automate the cropping process using replicators. How to align the crops in a gallery and store them in a cache in TouchDesigner also became clear. It felt as if the insights from the tutorials had connected seamlessly in my mind, forming a coherent set of step-by-step instructions.

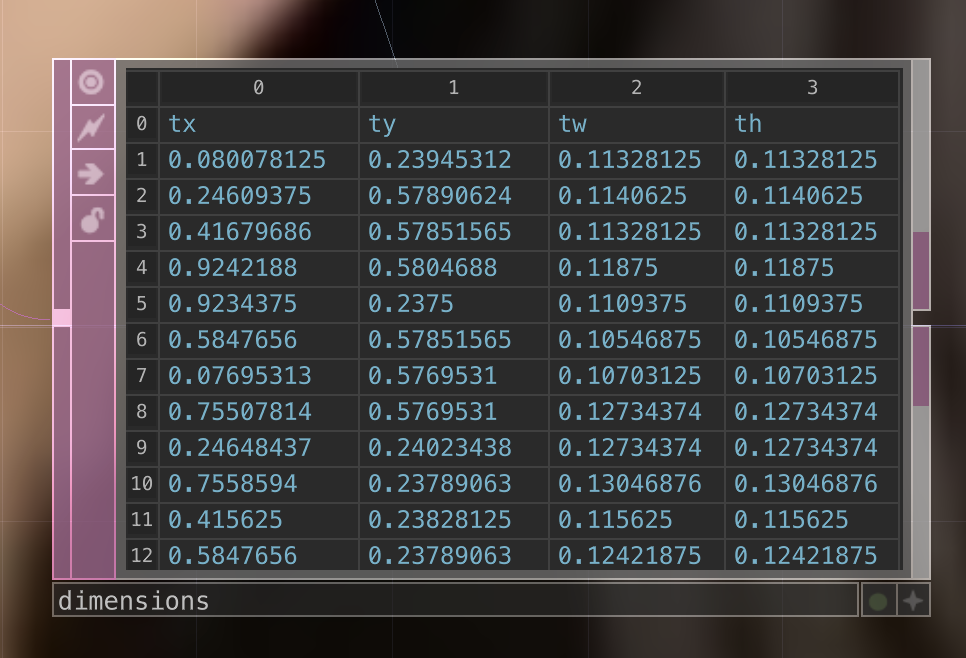

When I got home, I immediately tested my ideas. Successfully extracting the values into a Table DAT and referencing them in my Crop TOP marked a significant milestone. The normalized values were perplexing at first, but I soon understood that they ranged from 0 to 1.

First, I needed to find out exactly which point the x and y values refer to. In p5, I'm in the habit of translating x and y to the center of a shape using half its width and height, but I wasn't sure whether this software calculates it differently. After manually shifting the crop around, I found that the x and y values marked the center of the bounding box, and the width and height values spanned the full width and height of the box.
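Since x and y mark the center and the width and height span the whole box, the same half-size trick from p5 gives the crop edges. Below is a minimal Python sketch, assuming `tx`, `ty`, `tw`, `th` are the normalized 0 to 1 values from the Table DAT; `box_edges` and `clamp01` are hypothetical helpers for illustration, not TouchDesigner functions.

```python
# Hypothetical sketch: turn a normalized, centre-based bounding box
# (tx, ty, tw, th, all in 0-1) into the four edges a crop needs.
# Assumes (tx, ty) is the box centre and (tw, th) the full box size.


def clamp01(v):
    """Keep a normalized coordinate inside the frame (0-1)."""
    return min(max(v, 0.0), 1.0)


def box_edges(tx, ty, tw, th):
    """Return (x_min, x_max, y_min, y_max) as fractions of the frame."""
    half_w, half_h = tw / 2, th / 2
    return (
        clamp01(tx - half_w),  # left edge
        clamp01(tx + half_w),  # right edge
        clamp01(ty - half_h),  # lower y value
        clamp01(ty + half_h),  # higher y value
    )


if __name__ == "__main__":
    # A box centred in the frame, a quarter of the width and half the height
    print(box_edges(0.5, 0.5, 0.25, 0.5))  # (0.375, 0.625, 0.25, 0.75)
```

Whether `y_min` is the top or the bottom edge depends on where the image origin sits, which is one place a vertical misalignment can creep in. The clamp keeps a face near the border from pushing an edge outside the frame.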

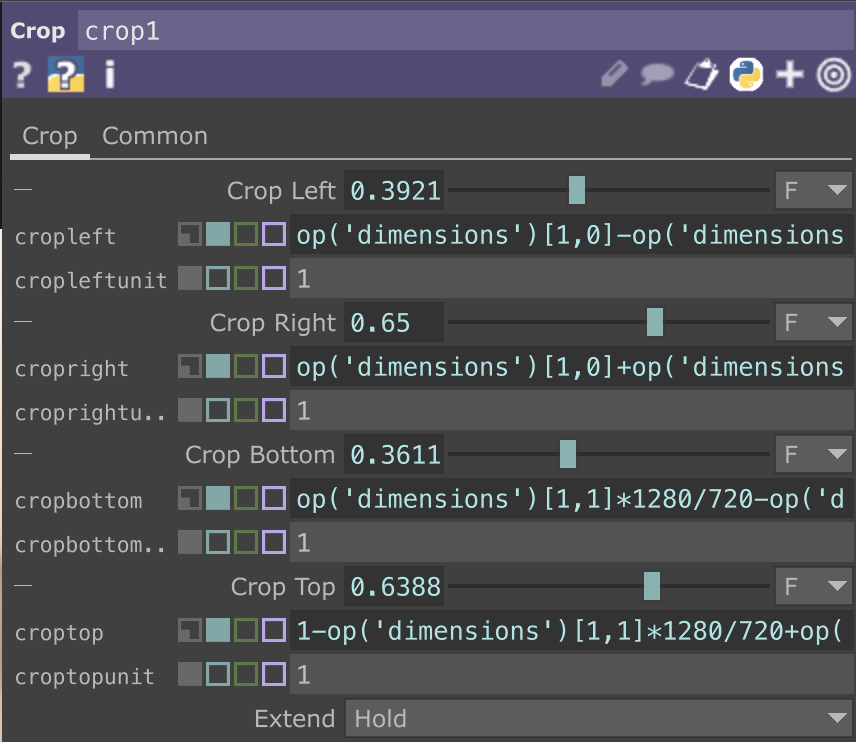

From this point on, I had to write expressions, which is where my prior experience with p5.js came in. I worked through some of the expressions I had used before and, from there, understood the structure of an expression (where the brackets go, what the parentheses are for, and so on). Using TouchDesigner's reference documentation, I figured out how to write expressions that refer to the values in my Table DAT.

I managed to crop the left and right sides of the bounding box but ran into problems with the top and bottom: the crop was misaligned even though I had used the same logic as for the other sides. After some troubleshooting, I realized the problem lay in how the Python code was written. The ty and th values were retrieved differently from the tx and tw values, in a way related to the aspect ratio of the resolution, and I couldn't figure out how to account for this in my expression.
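One possible explanation for the top-and-bottom misalignment, offered as an assumption rather than a diagnosis: if the Python script divided the vertical values by the frame width instead of the height, or measured y from the top (as OpenCV does) while TouchDesigner's image coordinates start at the bottom-left, then the horizontal logic would not carry over. The hypothetical helper `fix_vertical` below sketches both corrections; its two flags describe what the script *might* be doing and would need checking against the actual code.

```python
# Hypothetical sketch of two possible causes of vertical crop misalignment.
# Both flags are assumptions about the detection script, not confirmed facts:
#   scaled_by_width: ty/th were divided by the frame WIDTH instead of its height
#   flip_y: ty was measured from the top (y down) but is needed from the bottom (y up)


def fix_vertical(ty, th, frame_w, frame_h, *, scaled_by_width, flip_y):
    """Re-express ty/th as fractions of frame height, optionally bottom-origin."""
    if scaled_by_width:
        # Multiplying by w/h turns "fraction of width" into "fraction of height"
        ratio = frame_w / frame_h
        ty, th = ty * ratio, th * ratio
    if flip_y:
        # Mirror the centre vertically; the box height itself is unchanged
        ty = 1.0 - ty
    return ty, th


if __name__ == "__main__":
    # 1280x720 frame; face centre 180 px from the top, 180 px tall,
    # with both values mistakenly divided by the width (1280)
    print(fix_vertical(180 / 1280, 180 / 1280, 1280, 720,
                       scaled_by_width=True, flip_y=True))
```

On a square frame the ratio is 1, which would explain why such a bug only shows up on the vertical axis at non-square resolutions such as 1280x720.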

Despite the top-and-bottom hiccup, my progress was substantial; I even emailed Andreas to share my sense of accomplishment at getting past this impasse. In the email, I also asked for his help resolving the alignment issue, and he graciously agreed to lend a second pair of eyes.

Extracted tx tw ty th values

Written expressions

Cohort Presentation Feedback

I would describe my experience with the cohort presentation as quite smooth. I felt confident in my research and its presentation. I was under the impression that we were limited to a 6-8 minute presentation, so I intentionally kept my material concise.

However, I must admit that the feedback I received didn't provide as much new insight as I had hoped. Much of it felt like reminders rather than substantial feedback. I was particularly eager for suggestions on how to measure algorithm literacy, but it appeared that not everyone fully grasped the nuances of my research.

One valuable suggestion came from Gideon, who recommended elaborating further on the ethical dilemmas and illustrating them with real-life examples to emphasize their significance. This is indeed covered in my dissertation, but I had refrained from delving too deeply into these details during the presentation to keep it concise and engaging.

Vikas wisely cautioned me against delving too deeply into the theme of surveillance. While digital surveillance and algorithms are related, they aren't the primary focus of my research. I will heed this advice to maintain the research's focus.

Mimi's feedback reminded me not to overly prioritize the technical aspects of my prototype but to maintain a balanced emphasis on fostering algorithm literacy. This is aligned with my intentions; however, it's challenging to fully convey this without a functional prototype.

Collectively, the lecturers raised questions about using an exhibition as a measure of algorithm literacy. This has prompted me to reevaluate my research objective and whether measurement is indeed necessary. If not, I need to consider what my dissertation discussion should encompass, especially given the impending draft deadline in two weeks.

I also greatly appreciate Yasser's recognition of my efforts to experiment with design for a positive real-world impact. While I acknowledge some bias in my work, my overarching goal is to create a meaningful impact, and I'm pleased that Yasser highlighted this intention.

Mika's Presentation

Dissertation Introduction Update

Reflecting on the cohort presentation, I identified gaps in my introduction that, once filled, would help readers understand my research better. Previously, essential information was buried in my RPO literature review due to word count constraints, so I brought over some of that content to supplement the reader's initial understanding of my research.

I also added more justification for the importance of my research. I began by introducing the existing avenues for fostering algorithm literacy, followed by the challenges within these avenues. From these, I identified an opportunity for the alternative my research proposes: using speculative design to educate a broader population demographic, rather than relying on education in schools.

This adjustment provided a compelling rationale for employing speculative design, lending greater coherence to my research. Previously perceived as a random or experimental choice, speculative design now emerges as a purposeful solution to the identified challenges.

In summary, my refined research introduction now furnishes readers with comprehensive context. It underscores the urgency of addressing algorithmic dilemmas, assesses potential solutions, scrutinizes challenges within existing frameworks, and proposes a relevant and innovative alternative. I find myself highly content with the progress achieved thus far!

Dissertation Literature Review

The literature review in my RPO only scratched the surface of my research. Now that I have a better understanding of what I want to achieve, I need to go more in-depth in my literature review to bring out the key points of my research. Finding readings was much easier this round, since I already had a very clear idea of my research. I broke each research pillar down further into subsections with headers to make the outline clearer. I find that this also helps me link paragraphs better without having to build up from one section to the next.

Algorithm Dilemmas

For each pillar, I began by defining it in the context of my research. For this pillar in particular, I emphasized the implications of the three algorithm dilemmas most evident in algorithm curation, giving a brief description of each, followed by the societal implications it can have if left unaddressed.

I added another section expanding on what I briefly touched on in the introduction, elaborating on the ongoing efforts to address these dilemmas. This also helps justify why algorithm literacy is regarded as a promising solution, by highlighting the need for individual effort.

Algorithm Literacy

I added a subsection, 'Factors Influencing Algorithm Literacy Development Among Users', to the literature review I had previously written in my RPO, separating it from the other areas of algorithm literacy I want to address. I also included examples of existing approaches to fostering algorithm literacy, which help me communicate the challenges with these approaches and the need for a new avenue.

This then led to ‘Psychological Foundations of Algorithmic Understanding’, which brought me closer to the chosen medium of speculative design. In this section, I highlight the concepts that speculative design excels in, further justifying the use of speculative design.

Speculative Design

In my RPO, I outlined the fundamental principles and methodology of speculative design as a catalyst for change. However, owing to word count constraints, I omitted an essential aspect – the efficacy of this speculative approach. Acknowledging the inherent speculation in my goals, I recognized the necessity of substantiating this with a case study illustrating the effectiveness of speculative design in driving actionable outcomes.

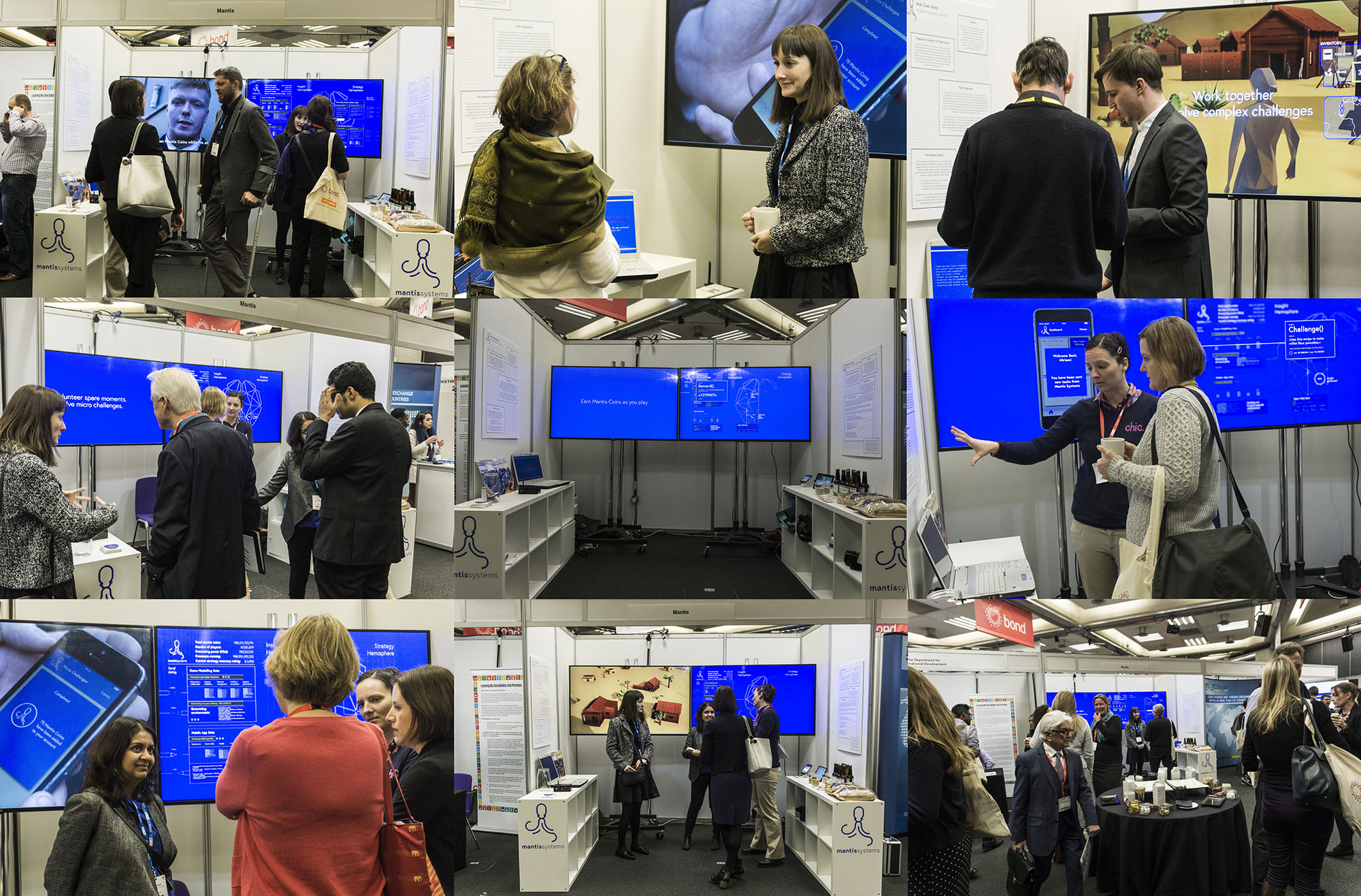

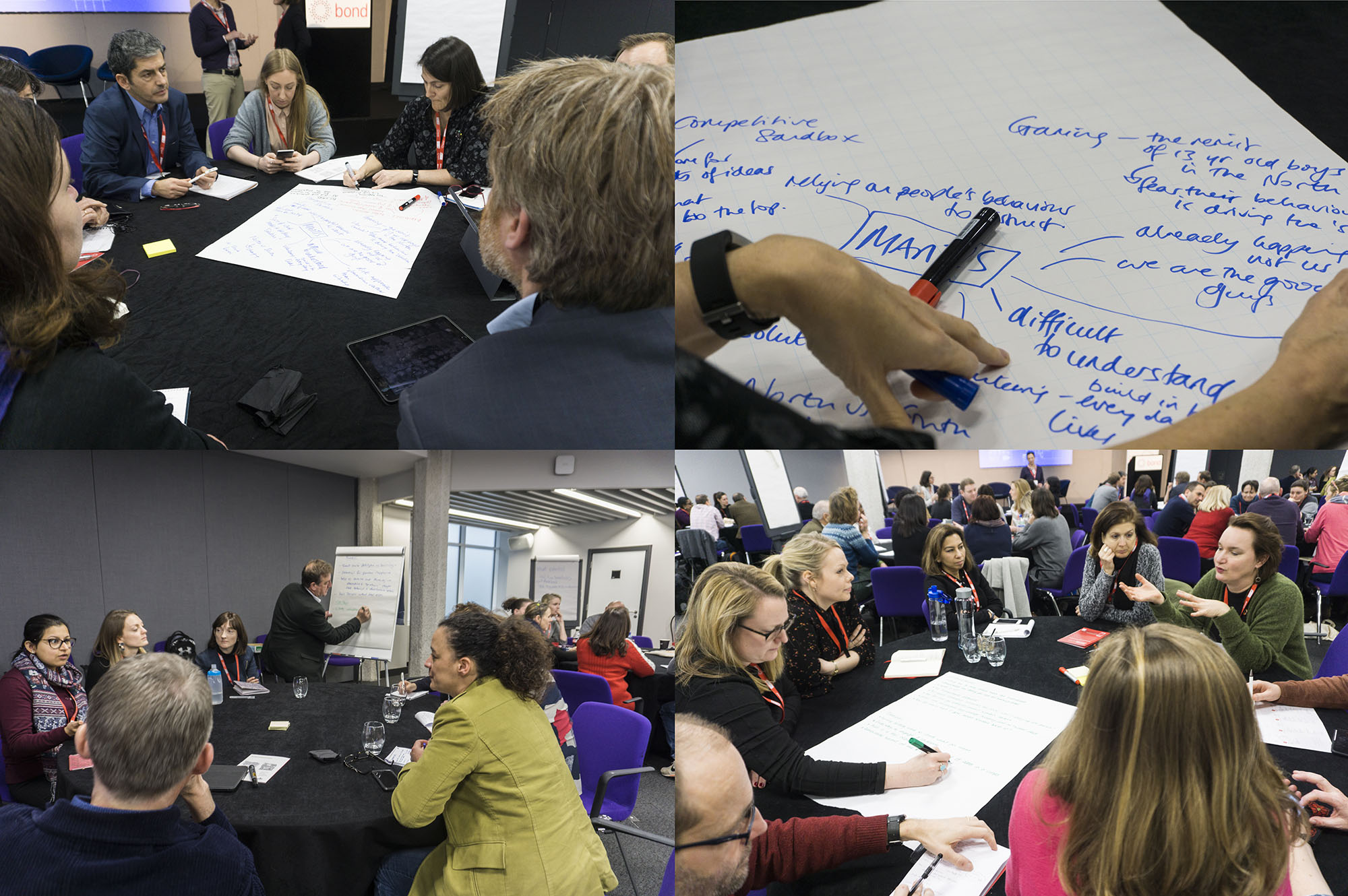

During my exploration of speculative design references, I encountered an intriguing project, 'Mantis Systems' by Superflux. The project's concept seamlessly aligns with my objectives. Although its categorization as a social experiment may be debatable, the researchers employed an infiltration method, deliberately misleading attendees about the authenticity of Mantis Systems. This strategy aimed to elicit reactions, prompt ethical considerations, and stimulate discussions on data privacy and digital ethics. The eventual revelation that the project was not real provided valuable insights used to encourage development leaders to prepare for unpredictable futures.

'Mantis Systems' stands as compelling evidence for the effectiveness of speculative design, serving as a crucial reference for my own project. Incorporating this case study into my research aims to captivate readers and pave the way for a deeper understanding of my chosen approach and methodologies in subsequent sections.

Literature review breakdown

Mantis Systems Conference

Mantis Systems Workshop